The TL;DR Version

Wireframes suck. We should stop delivering them to our clients and strive toward a design artifact that goes beyond a simple prototype, something I call a design model. Think of a design model as a cross between a live, parameterized prototype, a wiki for annotation and commentary, and a documentation database.

The Slightly Longer Version

Instead of wireframes, we should dare to imagine a new, better sort of design artifact, powered by a set of tools that bridges the design chasm between the napkin scribble and full-on production code. Today, we fill that chasm with scads of paper documentation populated by simple line drawings with lots of text.

This isn’t design. This is paper documentation depicting static poses of a dynamic experience; a 19th century answer to a 21st century problem. It is, like the wise man said, dancing about architecture.

Instead of wireframes, we must invent proper UX Design Modeling tools, and deliver a design model of real software to our clients as the engineering precursor to production code.

What Others Have Said

The value of wireframes has been a topic of tweetery and blogmongering the last few years by a growing chorus of eloquent voices: Andy Rutledge, Christina Wodtke, and Jason Kunesh to name just some. Each of these writers is like a gardener lifting out a single shovelful of earth, collectively and perhaps unintentionally digging a neat little grave for traditional wireframes.

Andy moves the most earth in this task, arguing passionately for several things:

- that wireframes often don’t express what we want (motion, emotion, sound, transitions)

- that they often express things we don’t want (typography, placement, copy)

- that they often confuse clients by foolishly separating visual design from interactive design

- that wireframes are mostly useless for daring, visually-driven designs

- that interactive prototypes are often a better way to express design intent

Yes, to all these things. (Apologies to Andy if I didn’t capture his arguments fairly.)

And To Pile On…

And in my view, that’s just the start. I add:

- wires are brittle in the face of seemingly simple change requests. A single “fix” can ripple work through hundreds of pages of wires because there’s no way to describe relationships between things (“this thing is always x pixels away from that thing”) in simple, non-parametric drawings.

- wires don’t have a graceful path to building prototypes or usability artifacts (building such things often means rework in code).

- wires can be difficult for clients to read properly, prompting questions that wires can’t answer, busting expectations.

- wires don’t age gracefully. Clients often ask that wires be kept aligned with visuals (because clients get confused even if we don’t).

- wires are often impossible for VIP stakeholders to read (but waiting for the full visuals is often too late to take in feedback from this set of stakeholders). We need a way to drive toward tentative visuals early in the process while there’s still time to react to feedback.

- wires can be difficult for designers and clients to annotate as a group. (This is a flavor of the version control problem.)

- wireframe documents (Illustrator, Omnigraffle, etc.) don’t integrate well, or at all, with the more formal issue tracking tools that monster-sized projects rely on.

I could go on like this…

And still, in spite of this, clients keep asking for wireframes and we keep obliging. Mostly, I think, because there’s not much we can offer as a better alternative. Our clients deserve a better answer.

This, my friends, my fellow cap-D-Designers, is a Design challenge. Perhaps a challenge that threatens to redefine our craft. Fine by me. Bring it.

Sketching Is Still Important

I want to be clear: sketching static wireframes with pen/paper/postits and whiteboards is still necessary as an internal communication tool and a “thing to think with,” a way to solve problems – the cocktail napkin still has a role to play in our design process. I am arguing that our design thinking should be delivered to clients in something other than fancy napkins.

Modeling Tools for UX

If wireframes are to die as a deliverable, we have to answer the softball question: what would be better than wireframes?

Our designed objects are living, breathing systems; our deliverables should be too. We should move away from documents and toward the notion of a tool. This tool isn’t a programming tool, but has aspects of it that look like code. It isn’t a drawing tool, but has parts that look like common drawing tools and can consume assets from common drawing packages. It’s much more of a “what-if” engine – a thing that lets you noodle with options as you go. It’s parametric, so you can plug in variables and watch the design change.

Like CAD for UX. More than drawing. Less than programming.

I’m talking about a modeling tool for user experience.
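To make “parametric” concrete, here is a minimal Python sketch of the idea. Nothing here is a real tool: `Params`, `layout`, and every field name are made up for illustration. The only point is that positions are computed from variables and relationships instead of being drawn by hand.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Params:
    """Hypothetical design variables a designer might want to noodle with."""
    margin: int = 16         # space between the screen edge and the content
    gap: int = 8             # "this thing is always `gap` pixels from that thing"
    button_height: int = 44


def layout(p: Params, screen_width: int = 320) -> dict:
    """Derive every position from the parameters; nothing is hand-placed."""
    title = {"x": p.margin, "y": p.margin, "w": screen_width - 2 * p.margin, "h": 24}
    # The button is defined relative to the title, not at an absolute spot.
    button = {
        "x": title["x"],
        "y": title["y"] + title["h"] + p.gap,
        "w": title["w"],
        "h": p.button_height,
    }
    return {"title": title, "button": button}


base = Params()
what_if = replace(base, margin=24, gap=12)  # one change, the whole layout follows

print(layout(base)["button"])
print(layout(what_if)["button"])
```

Changing `margin` and `gap` once moves and resizes the button without anyone redrawing it, which is the “what-if” behavior I have in mind.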

A Single System, Many Views

This modeling tool is one that supports the construction of a single, integrated design artifact, interconnecting logical screens with visuals, transitions, audio events, annotations, review notes, data feeds, and business logic. Parts of it are code, to be sure. Parts of it are drawings. Parts are excel spreadsheets. Parts are driven by live web services.

It allows for progressive refinement of a design and, when released to a client, acts as a living, breathing spec and reference prototype all in one. The databases that contain the information can be brought together to create many different views, different design artifacts that help express Design Intent.

The first core idea is that the model can emit many renditions depending on the need. The skin can start as hand-drawn sprites, and move to hardline vectors or visually-realized designs as they become available. Pieces get augmented logically, never redrawn. Visuals rise in fidelity, but can always be rolled back to monochrome/line drawing versions to emphasize behavior over visuals when that’s necessary. Transitions can be layered into the system in place. This is about progressive refinement, not re-work.
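As a rough sketch of what “many renditions” could mean, assume each logical element carries whatever skins exist for it so far, and a renderer picks the best one at or below a requested fidelity. The fidelity names, the `Element` class, and the asset file names are all hypothetical.

```python
from dataclasses import dataclass, field

FIDELITY = ["sketch", "vector", "visual"]  # assumed ordering, lowest to highest


@dataclass
class Element:
    """One logical piece of a screen; `skins` maps a fidelity level to an asset."""
    name: str
    skins: dict = field(default_factory=dict)


def render(elements, max_fidelity):
    """Pick, per element, the best skin available at or below max_fidelity."""
    allowed = FIDELITY[: FIDELITY.index(max_fidelity) + 1]
    out = {}
    for el in elements:
        available = [level for level in allowed if level in el.skins]
        # Fall back to a plain labeled box when no skin exists yet.
        out[el.name] = el.skins[available[-1]] if available else f"[box: {el.name}]"
    return out


screen = [
    Element("header", {"sketch": "header_scan.png", "visual": "header_final.png"}),
    Element("login_button", {"sketch": "button_scan.png"}),
    Element("footer"),
]

print(render(screen, "visual"))  # highest fidelity available per element
print(render(screen, "sketch"))  # rolled back to line drawings

# Augmenting, not redrawing: a new skin is added to the same logical element.
screen[1].skins["vector"] = "button.svg"
```

Rolling back to `"sketch"` is just another call to `render` on the same model, so emphasizing behavior over visuals costs nothing.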

Ce-ci n’est pas (de) Rapid Prototyping

[tip of the hat to friend @maartend for fixing my pseudofrench]

OK, so far this just sounds like rapid prototyping. That’s half of it, but perhaps the less interesting half of the Design Model. This is about blending the interactive prototype with a rich annotation engine that allows designers to add metadata: a conversation about the prototype that is as interactive as the prototype itself.

That’s the second core idea: the running model can be annotated. It can support callouts with labels, and these callouts themselves can be video, voice-over, text, links to other assets or pictures. The callouts can be data-driven from the behavior of the model (“when the <persona x> clicks on the <button you just pressed> they see <screen Y>”).

These callouts can serve as the official documentation for the model (like a traditional wire), or review commentary from the team or the client. The wireframe, standing upright, opposable thumb firmly wrapped around a better tool, all evolved and ready to kick some static documentation ass.
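A data-driven callout could be as simple as a text template filled in from whatever just happened in the running model. This is only a sketch; the `Transition` record and its field names are my own invention, not part of any existing tool.

```python
from dataclasses import dataclass


@dataclass
class Transition:
    """One observed step in the running model (all field names are hypothetical)."""
    persona: str
    control: str
    target_screen: str


# The callout text is a template, not hand-written copy for each screen.
CALLOUT_TEMPLATE = "When the {persona} clicks on the {control} they see {target_screen}."


def callout_for(t: Transition) -> str:
    """Fill the template from the model's behavior."""
    return CALLOUT_TEMPLATE.format(
        persona=t.persona, control=t.control, target_screen=t.target_screen
    )


step = Transition(
    persona="first-time buyer",
    control="Checkout button",
    target_screen="the Payment screen",
)
print(callout_for(step))
```

Because the text comes from the model, renaming a screen or a control updates every callout that mentions it.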

What a Design Model Supports

With this basic idea in mind, think about what a Design Model might look like:

Authoring Side

- it has an authoring side that looks like a drawing program, supporting construction of the system both as interaction models and template pages and as visual systems of colors, type, lockup and gridlines.

- it encourages visual designers and interaction designers to solve the problem together by giving them access to the same tool.

- it lets designers express transformations and transitions between static states and to get that information into the system early, not late.

- it has a ready-made set of common, mock databases/feeds to supply things like address books, contact cards, sample SMS messages, emails, videos, etc. that feed the user experiences we make. Of course, there’s an extension mechanism to create custom collections.

- it has a way of expressing external events that exist outside the software experience – friends coming online, dropped calls, page not found, etc. Again, the event model is extensible as new interaction models are everywhere.

- it uses instances everywhere: any logically unique object in the experience is instanced out of a symbol library or template. These masters are parametric and referenced – changing them cascades changes all through the system. This approach enforces consistency and is able to produce, as a side-effect, a list of every dialog, every control, every animation, every cut asset and every variant or exception to the system. This modular and abstract way of working will be a new challenge for designers, but a challenge that we are ready for, indeed, begging for.

- it supports the construction of a glossary of terms – every project I’ve ever been on has created or used words that were wholly new or unique to the client.

- it supports writers with spellchecking, layout, and consistency-checking tools. Ideally, it would have a workflow mechanism to support the traditional writer-editor-proofer cycle.

- redlines, if they exist at all, should be expressible by the system automatically, or with very little user-input, much like modern CAD software dimensioning.
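The “instances everywhere” item is the one most easily shown in code. Here is a minimal sketch, assuming a made-up `Master`/`Instance` pair: instances look up their master at read time, so one change cascades, and the inventory and the list of exceptions fall out as side effects.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Master:
    """A symbol in the library; its properties are shared by every instance."""
    name: str
    props: dict


@dataclass
class Instance:
    """A placed use of a master, with optional per-instance overrides."""
    master: Master
    screen: str
    overrides: dict = field(default_factory=dict)

    def resolved(self) -> dict:
        # Master values are read on demand, so edits to the master cascade.
        return {**self.master.props, **self.overrides}


def inventory(instances):
    """Count every use of every master across the whole design."""
    return Counter(i.master.name for i in instances)


def exceptions(instances):
    """List the instances that deviate from their master."""
    return [(i.screen, i.master.name, i.overrides) for i in instances if i.overrides]


button = Master("primary_button", {"color": "blue", "height": 44})
placed = [
    Instance(button, "login"),
    Instance(button, "checkout", overrides={"color": "green"}),
]

button.props["height"] = 48  # change the master once

print([i.resolved() for i in placed])
print(inventory(placed))
print(exceptions(placed))
```

Both placed buttons pick up the new height, while the checkout button keeps its green override and shows up in the exceptions list.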

Playback/Review Side

- it allows the connected screens of visualized wires to play out for stakeholders, usability subjects, and design teams doing internal critiques.

- Screens can be collected into use cases that are reflected and cross-referenced to business requirements. They exist not as context-free drawings but as logical states of the model.

- Annotations and callouts can be turned on and cross-referenced with business requirements, appearing as a side-bar to the running model, or in a separate rendering layer.

- Reviewers can leave notes on any screen, in any state of the model.
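One way to picture “screens as logical states” is a small state machine, where a use case is just a path through it and the requirements cross-reference is a lookup on the states visited. The screen names, actions, and requirement IDs below are all invented for the sketch.

```python
# Hypothetical model: screens are states, user actions are transitions.
TRANSITIONS = {
    ("login", "submit"): "home",
    ("home", "open_cart"): "cart",
    ("cart", "checkout"): "payment",
}

# Assumed mapping from states to business requirement IDs (IDs are made up).
REQUIREMENTS = {
    "login": ["REQ-1"],
    "cart": ["REQ-7"],
    "payment": ["REQ-7", "REQ-9"],
}


def play(start, actions):
    """Walk a use case through the model and return the states visited."""
    path = [start]
    for action in actions:
        key = (path[-1], action)
        if key not in TRANSITIONS:
            raise ValueError(f"no transition for {action!r} from {path[-1]!r}")
        path.append(TRANSITIONS[key])
    return path


def requirements_covered(path):
    """Cross-reference the visited states against the requirements they touch."""
    return sorted({req for state in path for req in REQUIREMENTS.get(state, [])})


purchase = play("login", ["submit", "open_cart", "checkout"])
print(purchase)
print(requirements_covered(purchase))
```

A use case that asks for a transition the model doesn’t have fails loudly, which is exactly the kind of question a static drawing can’t answer.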

The Design profession is decades old now. We have a much clearer picture of what we need – it’s about time we have some Computer-Aided Modeling tools that directly support and automate what we do.

But Don’t We Already Have…?

No. Not really. The pieces are in place, but the Computer-Aided Modeling solution eludes us. I hope the picture I am starting to paint here makes it clear that the possibilities are beyond what any of our tools provide to date.

Illustrator, InDesign, Omnigraffle, Visio, Catalyst and the like are not Computer-Aided anything. They’re just making drawings with computers. They really don’t aid us at all. Yes, I know about symbols and the click-through and interactive features of these packages, but this is pretty weak sauce and falls short of a full-credit modeling solution.

Microsoft Expression Studio is getting closer to the mark, but it is a Silverlight/.NET Microsoft-centric beast of a system and is still incomplete.

Axure and iRise get other pieces of this vision right, emitting requirements docs derived from the wires, but they’re still very wire-framey in nature and not parameterizable and customizable enough to capture what I’m talking about. I will admit that my view of these two tools is possibly stale and I would invite others with more experience to chime in with their experiences.

Other Designers Are Thinking About This

Turns out there’s an analogous conversation going on in the world of architecture: a growing interest in BIM (Building Information Modeling), which combines the graphical depiction of a blueprint with backend data that allows designers to estimate building material and schedule costs as changes are made to the blueprint. This is clearly a higher-order thing than just a blueprint.

Where We Go From Here

I want conversation, questions, debate and a clearer picture of what this tool might be and how existing tools do and don’t quite realize this vision. I want specifically to talk about how a tool like this can get us to work in a more high-fidelity visual language earlier than we ever thought possible. We owe it to our clients to speak to them in a visual language that speaks to both the head and the heart.

I’m convinced there’s something more we can do than the current state of the art. We can and must do better.

We must dare to be great. We must dare to imagine tools that are as supple, expressive and reactive as our imaginations can allow. We must listen to ourselves as our own customers and step forward with a proposal for higher-order design and production tools.

Then we must stop creating wires.

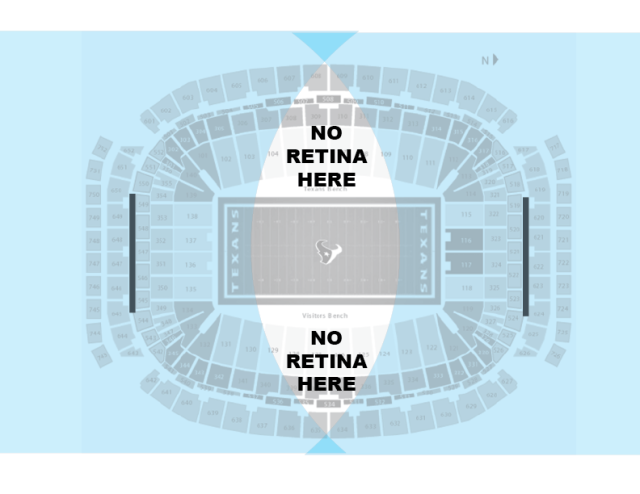

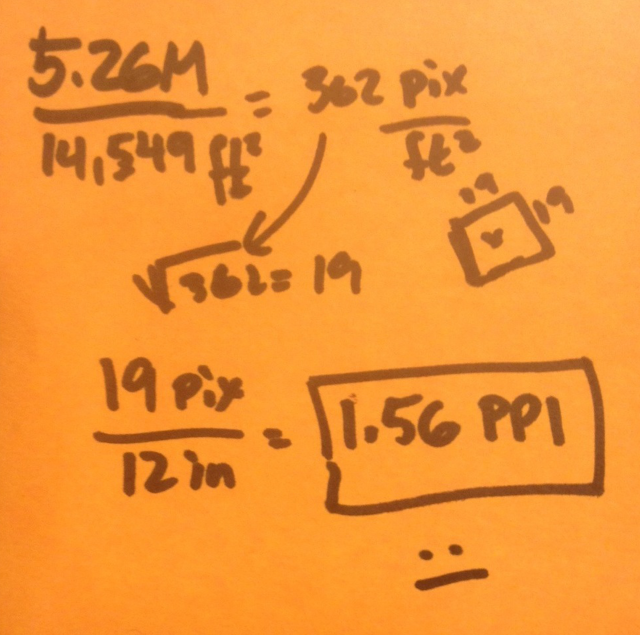

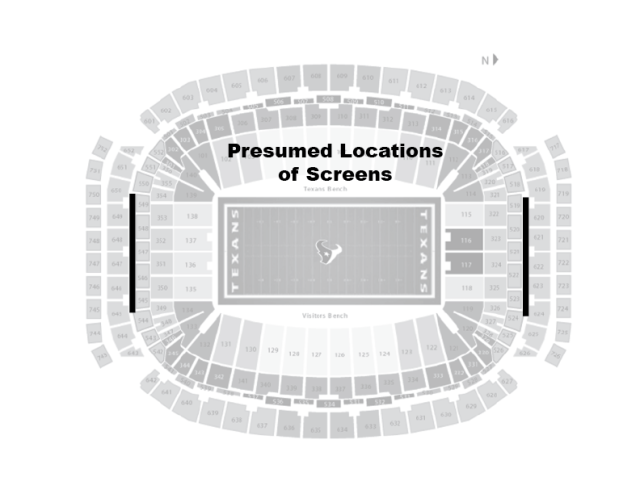

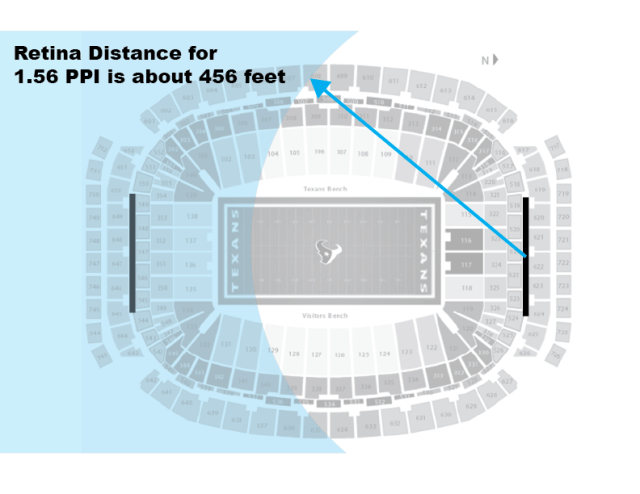

We all know that Apple coined the term “Retina Display” to describe their high density displays for iPhone. The screens for mobile devices at the time were hovering in the 120-150 PPI (pixels per inch) range for most handsets, while the Retina screen for the iPhone clocked in at an impressive 326 PPI. It was heralded as a great breakthrough for visual fidelity and accuracy.
